What is quality assurance?

The role of QA exists to prevent problems in an application so that users don't encounter bugs and crashes. Quality assurance, referred to simply as QA, is a systematic process used to determine whether a product meets specifications. An individual in this role can have many titles, including QA, QA engineer, QA analyst, software engineer in test, or tester. Throughout this course, you will hear me use these titles interchangeably. An individual in the QA role constantly questions parts of the software development process to ensure the team is building the right product and building it correctly. A QA's primary goal should be to help their team move quickly with confidence. They establish and maintain standards for how to best test a software product. Solid software testing practices are a huge asset to any team that wants to produce a well-functioning application.

How to ensure quality

Good quality results in a software product that is built correctly, as specified, and works reliably. Quality can be ensured across the code and influenced by process. It can be measured in various ways, perhaps by customer happiness, annual revenue, or how well the application functions over time. Every quality software product has a team behind it that's responsible for its success. The team is composed of multiple roles, and it is up to all of them to work together to produce high-quality software. It starts with clear specifications. Then the specifications are implemented in code, and the code is tested thoroughly to ensure the application works as intended. After the code is released, users will start to use the application and provide feedback. There isn't a quick and easy solution for how to ensure quality. Instead, it's an ongoing process that the whole team needs to be invested in. To maintain quality, it's necessary to continue to iterate and improve on processes in order to have the best working software.

Role of QA

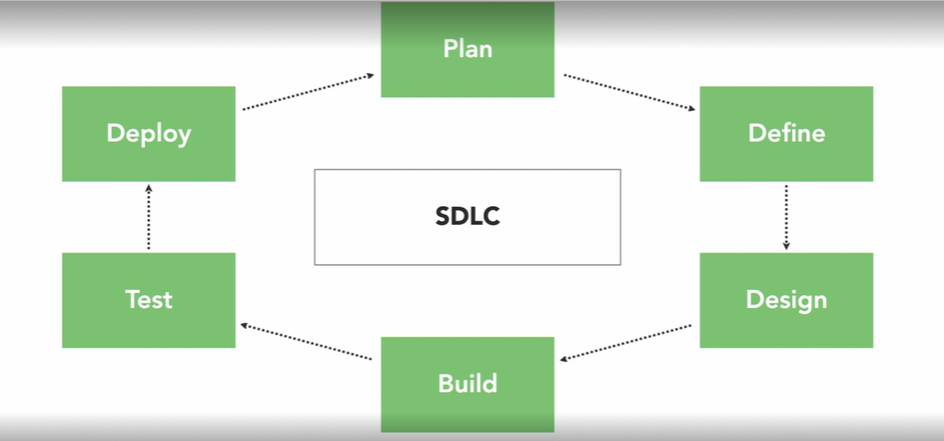

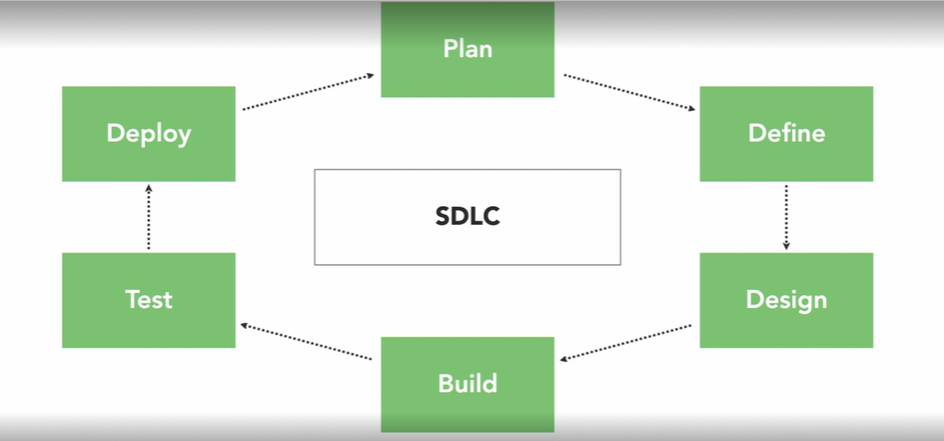

1) Get involved throughout the SDLC

The software development lifecycle, referred to as the SDLC, is a process that produces high-quality software in the shortest amount of time. The SDLC includes detailed steps for how to effectively develop, change, and maintain a software system, and is typically broken down into six parts: plan, define, design, build, test, and deploy. The steps are linear and go in sequential order, but what's more important to understand is that it's a cycle that each feature should iterate through. Traditionally, a QA has only gotten involved during the testing phase, but it has proven really useful for a tester to get involved as early and as often as they can to ensure quality throughout the entire development process.

During the planning phase, a QA can help identify use cases of the feature and how to best mitigate risk or look for alternate solutions. When defining a feature, a QA can help write specifications or acceptance criteria and help decide what's in scope and what's out of scope. At this phase, a QA can also begin to write a test strategy and test scenarios. Here the team can also define when the product will be released and what metrics can be used to measure success. In the design and build phases, a QA can solidify test scenarios and get feedback on them from the team. They can begin to manually test the scenarios defined and script automated tests as well. In the test phase, manual and automated tests are completed. This usually happens with the help and collaboration of developers. A QA can also work with a business team member to determine if the functionality will be accepted into the release. When it's time to deploy the product, a QA is usually involved in the release process and helps to validate the release build. After the release, a QA will verify that the functionality in production is still working as expected. After one full cycle, or one development sprint, the same cycle can start all over again in another iteration.

When a QA gets involved outside of just the testing phase, the product will have quality built into it at all stages. This will ensure a successful release and a great experience for users.

2) Collaborate with the team

It is so valuable to collaborate with cross-functional teammates in each of the phases of the SDLC. Collaboration between teammates leads to a better understanding of each other's roles and a better outcome for the product. A QA can learn from their teammates and, in return, help others develop a quality mindset.

In a typical software delivery team, there are developers, a designer, a tester, and a product manager or business analyst. The team works together throughout the SDLC to deliver a quality product, and different roles get involved within different phases. The plan, define, and design phases can include a representative from each role. The build and test phases include a developer, a tester, and a designer. The deploy phase includes a developer and a tester, and a product manager usually has the final sign-off.

A QA typically collaborates with a business team member to help determine acceptance criteria and scope for a feature or story. A business team member can also work with QA to provide sign-off on stories and get feedback about test scenarios and what to automate. When working with design, a QA provides feedback on mocks, prototypes, or the product itself after it has been deployed. Developers and QAs pair together to write tests and validate functionality.

Typically, the more collaboration, the better. Get creative and find the best ways to collaborate with your teammates across different phases of the SDLC. This will allow a QA to get more insight on how to do thorough testing, and will keep a team more in sync by creating a shared vision and building trust and accountability.

3) Set expectations and goals

I have shared how varied the role of a QA can be. Keeping that in mind, when you join any new team or project, it will be necessary to set clear expectations and measurable goals. It's important to ask questions to understand your role, share your strengths, and identify areas in which you want to grow. This way, you will come into a situation fully aware of what to expect.

It's also important to build a relationship with the team. Get to know each of your teammates and their roles, and build trust with one another. Spend time collaborating, and also schedule time for one-on-one sessions. Some individuals may have never worked with a software tester before. Some have a misunderstanding about how the role works and what it entails. Getting to know your teammates will spark ideas about how to best collaborate cross-functionally and support one another. Sharing your goals will make you more accountable, because others will be aware of them and can help you to achieve them.

As you get up to speed and start contributing to the project, make sure to regularly check in with your teammates. Constantly communicate what you are working on to the rest of the team, and don't be afraid to speak up if you see something wrong. If you need help, find someone who can answer your questions. Over time, ask for feedback to understand if you are meeting expectations, and give others feedback about how your experience has been working with them. Always try to give candid feedback, and align the feedback with how an individual is measuring up to their goals.

When I was a consultant, I had the opportunity to work with many different clients with unique needs. It was necessary that I be able to set clear expectations with my team early on, especially if I was only going to be working on a project for a few months and didn't want to waste any time getting off on the right foot. Before starting a project, I tried to get as much information as I could by talking to the team and understanding their challenges. Then I would take some time to observe and assess the situation. That allowed me to see first-hand what would work best for that project and which skills I would need to apply to make a change. Once I had a clear plan of approach for testing, I shared that with the team. With that said, I would get to work collaborating with the team to fix some of the challenges. Every few weeks or so going forward, I would check in with the team to see what I was doing well and how I could improve.

If you're not already doing any of this, I would recommend starting now. In addition, one-on-one meetings or retrospective sessions are great opportunities to share feedback and talk about how well expectations are being met. If you want to be a successful QA, then setting expectations and checking in on them regularly will give you a great start.

Test Planning

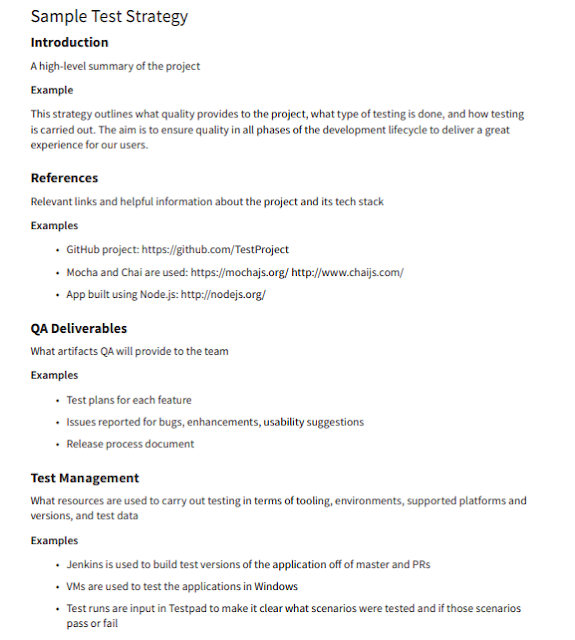

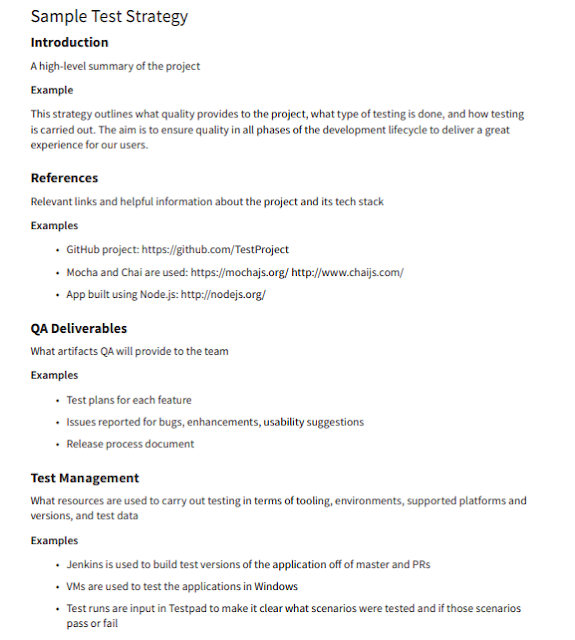

1) Create a test strategy

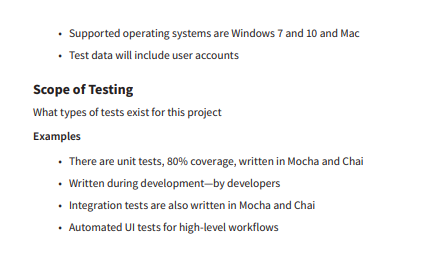

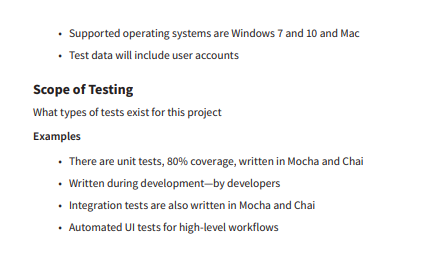

For each project, a QA engineer will create a test strategy, which describes how a product will be tested. A test strategy is useful so that anyone can read and understand the scope of testing clearly. I'll walk through a template to show what details I include in a test strategy. This template can be reused and modified for any project.

First up is the introduction. The introduction is a high-level summary of the project. An example introduction can be: this strategy outlines what quality provides to the project, what type of testing is done, and how testing is carried out. The aim is to ensure quality in all phases of the development lifecycle to deliver a great experience for our users.

Next up are references. This will include any relevant links for the project, including the project's repository and tools used in the tech stack. As an example here, I can link to the repository and describe the application tech stack and tools being used for testing.

Next up is QA deliverables. This is what a QA engineer will provide to the team. The first deliverable is this quality strategy, the high-level guide of how quality will be maintained on our project. Next there are test plans for each feature, which list test scenarios. Then there are issues reported. Examples of issues reported include bugs, enhancements, and usability improvements. Another deliverable is a release process document. This describes the steps to do a release to production.

Moving along in the test strategy, there is the test management section, which describes what resources are needed to carry out testing. First up is tooling. An example of tooling is: Jenkins is used to build test versions of the application, and virtual machines are used to test the application in Windows. Then there are test environments, which describe what environments are needed for exploratory and manual testing. An example of test environments is that manual testing will be done in environments created on demand, and automation testing is run against a dedicated machine and across Mac and Windows using Chrome. Then, there are supported platforms and versions. For example, Mac and Windows are the supported platforms and each major browser is tested against them. Lastly in this section is test data. A simple example of test data that will be needed is test accounts to access the application. Here it can be helpful to create a table of accounts so that they can be referenced later when testing.

The last section is the scope of testing. This describes what types of tests exist for this project. Examples of tests include unit, integration, functional, regression, and so on. For each test type used, specify how many there are or how much of the code they cover. Also identify which tools are used to write each type and who owns them.

Those five sections in the test strategy do a good job of setting expectations for what a QA will provide, what resources are needed, how testing will be carried out, and which tests will be performed. I'll include the test strategy as a handout for this video. Work with your team to create a test strategy document for your project.

2) Make a test plan

Each feature should have an associated test plan. A test plan lists scenarios, including edge cases, that describe how a feature should function, examining it from many angles. During the definition phase, a QA engineer will start to create a test plan for the feature. This way there is ample time for it to be completed, reviewed, and executed before the test phase is over.

The test plans I create have four main parts. First there is the scenario, which explains the steps or action that will be executed. Then there is the expected result, which describes what the outcome will be for a given action. Next there is the latest result, and last, there is a field for whether or not the scenario will be automated. This will either be true or false. There is one unique scenario per row.

Let's go through an example now. Say I'm working on a new feature for user management. This feature provides the ability to add and delete users, or edit a user's profile. If I focus on each of these user management areas, I can brainstorm a long list of scenarios. I'll first dive into defining scenarios for adding a user. Say I want to click a button to add a new user. Once I click, the expected result will be that the create user modal opens and has two required fields for username and email. There is a create user button that becomes enabled once the fields are populated. After the feature is implemented, I'll check if this passes or fails based on the expected result. It's likely that each scenario will be checked multiple times or by different individuals, so every time the check is done, the latest result can be updated. The last part of the scenario is whether it should be automated. Adding a new user sounds like an important feature, and likely something we're going to want to know always works. Therefore, this is a good candidate for automation. After defining one scenario, I can move on to other scenarios for adding a user, and then editing and deleting users.

Once a test plan is complete, I end up with anywhere from 10 to 100 scenarios. The number doesn't really matter so much. What's more important is that the test plan identifies all the possible actions the user can take when using a feature. When a test plan is complete, have a review meeting. Send out the test plan to attendees so they can asynchronously read through the scenarios and identify gaps or uncertainties. Once all the feedback has been addressed and the test plan is solidified, it can be referred to later when testing individual components or stories of the feature as they become complete.

In addition to reviewing the test plan as a group, I've seen great success when a team does group or mob testing together for 30 minutes to run through each scenario in the test plan. This happens in advance of the release and can give team members the chance to use the feature across different browsers, platforms, or devices. A test plan is a living document that should be updated as scenarios change and as tests are executed. It is a valuable artifact to have for every feature of an application.
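To make the four-part row concrete, here is a minimal sketch of the user management test plan as data. The class and function names are hypothetical, and the second row's automation flag is an assumption, since the walkthrough only decides that for the first scenario.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Scenario:
    """One row of a test plan: a single unique scenario."""
    scenario: str                        # steps or action to execute
    expected_result: str                 # outcome expected for that action
    latest_result: Optional[str] = None  # "pass" or "fail"; None until first checked
    automated: bool = False              # whether the scenario will be automated


# Rows for the "add a user" area of the user management feature.
test_plan = [
    Scenario(
        scenario="Click the button to add a new user",
        expected_result=(
            "Create user modal opens with two required fields, "
            "username and email"
        ),
        automated=True,  # important workflow, so a good automation candidate
    ),
    Scenario(
        scenario="Populate username and email in the create user modal",
        expected_result="Create user button becomes enabled",
        # automated left at the default; this choice is an assumption
    ),
]


def record_result(row: Scenario, passed: bool) -> None:
    """Overwrite the latest result each time the scenario is checked."""
    row.latest_result = "pass" if passed else "fail"


def automation_candidates(plan: list[Scenario]) -> list[Scenario]:
    """Return the scenarios flagged for automation."""
    return [row for row in plan if row.automated]


record_result(test_plan[0], passed=True)
print([row.scenario for row in automation_candidates(test_plan)])
```

In practice the same four columns usually live in a spreadsheet; the point of the sketch is only that each row is independent and that the latest result is overwritten on every check.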

3) Write acceptance criteria

It can be difficult to take a feature and determine how to implement it as a whole. User stories help break down a feature into manageable-sized pieces of functionality. They typically have acceptance criteria, which specify what work is included as part of that particular slice of the feature. Acceptance criteria, or AC for short, are conditions that a software product must satisfy to be accepted by a stakeholder. Acceptance criteria clearly define how each feature should look and function in detail. They're an extension of the scenarios defined in test plans. Acceptance criteria allow a developer to know what to implement code for, a business analyst to know what scope the story covers, and a QA to know which scenarios to test.

Acceptance criteria follow a specific format. They always start with a given. This is a precondition or beginning state. Next is the when. This describes the input or action of the scenario. The final part is the then. This describes the expected outcome of the scenario.

Let's look at an example of a user story which will provide the functionality to add an item to a shopping cart. One scenario of acceptance criteria might be: given I am viewing an item, when I press the Add to Cart button, then the item is added to the cart. Other acceptance criteria might define an error scenario. Let's take, for example, adding an item to a cart that is sold out. Given I am viewing an item that is sold out, when I press the Add to Cart button, then the item is not added to the cart, and I see a message that the item is out of stock. In this acceptance criteria I also add an and statement to describe an additional expected outcome for this scenario. This can be done for any part of the acceptance criteria.

It's pretty straightforward to define acceptance criteria, especially if scenarios have already been defined in a test plan. This is just another way to ensure quality and know that an application is being built exactly as specified.
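The given/when/then format maps directly onto the structure of an automated check. The sketch below is one way to express the two shopping cart criteria above; `Item` and `Cart` are hypothetical stand-ins for the real application, and the exact message wording is an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    name: str
    in_stock: bool = True


@dataclass
class Cart:
    """Hypothetical stand-in for the application's shopping cart."""
    items: list = field(default_factory=list)
    message: str = ""

    def add(self, item: Item) -> None:
        """Simulates pressing the Add to Cart button while viewing an item."""
        if not item.in_stock:
            self.message = "This item is out of stock"
            return
        self.items.append(item)


def test_item_is_added_to_cart():
    # Given I am viewing an item
    item, cart = Item("mug"), Cart()
    # When I press the Add to Cart button
    cart.add(item)
    # Then the item is added to the cart
    assert cart.items == [item]


def test_sold_out_item_is_not_added():
    # Given I am viewing an item that is sold out
    item, cart = Item("mug", in_stock=False), Cart()
    # When I press the Add to Cart button
    cart.add(item)
    # Then the item is not added to the cart
    assert cart.items == []
    # And I see a message that the item is out of stock
    assert "out of stock" in cart.message


test_item_is_added_to_cart()
test_sold_out_item_is_not_added()
```

Keeping the given, when, and then as comments makes it easy to trace each test back to the acceptance criteria it covers.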

4) Identify when testing is complete

It can take some time to work through all the activities within each phase of the software delivery life cycle. At some points of the SDLC, it can be unclear when you have done enough to move on to the next phase. This is definitely true for the testing phase. To take ambiguity out of the equation, it helps to determine what steps need to be taken to say that each story has been adequately tested. To do this, you need to define what it means for work to be done. The definition of done will specify what actions need to be completed before a piece of functionality is ready to be released to the public. The team should decide what will be included in a definition of done, so that they have a shared understanding and abide by it. Typically, this definition includes manual testing and sign-off of the acceptance criteria from product, design, and QA.

Work will start moving fast on projects, and it can be easy to cut corners when the team is in a rush and trying to get work completed before a release. There have been countless times when a release deadline was fast approaching and teammates tried to rush me through the testing phase by suggesting things like delaying automation until after the release. When a standard is set up, the team is held accountable to follow the steps for each story to get proper sign-off. While having an explicit definition of done might sound like a lot to do for every user story, it's just one final step to add some extra confidence, since there were already test scenarios and acceptance criteria defined and testing done throughout the process. After all, it's better to spend a little extra time making sure things are right than rushing to fix a nasty bug that's discovered too late.

Types of testing QA

Box testing

One way to model testing is to think about it in terms of a box. This model describes the different levels of testing that can be performed so that there is a holistic approach to testing an application.

First, there is black box testing, which means that the box is completely concealed and it is not possible to see inside of it. Each test scenario here examines the product from the outside. It provides input to the box and gets output from the box. This means that there is no knowledge needed about the internals of the application, such as what the source code is doing or how the system is working. The focus with black box testing is to perform an action in the user interface and expect a certain result from that action. Black box tests include manual testing and UI automation testing, both of which will help uncover issues with functionality and usability. QA engineers are responsible for this level of testing.

Next up is gray box testing. Here the box is semi-transparent. Test scenarios here examine the interaction between the outside and inside of the box. It requires QA engineers to have a deeper understanding of the application. Gray box tests include integration testing, which examines how components of the application work together. They can trigger some action in the UI and see how the code responds. Typically QA engineers and developers are responsible for this level of testing.

And lastly, there is white box testing. Here the box is completely transparent, and the focus is on the internals of the application and what is happening at the code or system level. It tests specific functionality in the code and verifies the result. White box testing includes unit and system testing. Developers are responsible for these types of tests and write them alongside development.

Take for example a shopping cart on an e-commerce web application. Items can be added and removed from the shopping cart, and a total is displayed based on the quantity of items in the cart. For this feature I'll want to think about the types of testing that can be done to ensure quality and confidence that the application works as expected.

At the white box level of testing I'm starting from inside the box. Examples of test scenarios that can be executed at this level are that the quantity can be increased and decreased successfully, and the calculation of items is performed correctly. These tests are written by calling functions in the code that can increase, decrease, and calculate the total. Different values are provided to the functions, and an exact result is expected to be returned.

At the gray box level of testing, I examine the interaction between components and the results a server or database returned. I want to know that the box as a whole works well rather than one specific component. A test I might write at this level would be to add an item to the cart and confirm from the API response that the item was added and the cart price was updated. Tests here start from the client, which sends a request to the server, and the server responds with details which I can verify.

For black box testing in this example I'm focusing on what input I provide to the box and what output I receive. An example of a test here is to add an item to the cart using the UI and see the cart quantity and price update. To write this level of test I manipulate web elements in a browser to click and add items to the cart and navigate to the cart. I can also get the value of web elements and confirm the output is what I expect.

So that's how you can model testing as a box. The whole idea is about looking at a box, or whatever application is under test, from multiple angles to provide thorough test coverage.
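The white box scenarios for the shopping cart can be sketched as a few unit tests that call functions directly and expect exact results. The function names, the dictionary representation of the cart, and the prices are all hypothetical; a real application would have its own implementation.

```python
def increase(cart: dict, item: str, by: int = 1) -> None:
    """Raise the quantity of an item, adding it to the cart if missing."""
    cart[item] = cart.get(item, 0) + by


def decrease(cart: dict, item: str, by: int = 1) -> None:
    """Lower the quantity of an item, removing it once it reaches zero."""
    remaining = cart.get(item, 0) - by
    if remaining > 0:
        cart[item] = remaining
    else:
        cart.pop(item, None)


def total_cents(cart: dict, prices_cents: dict) -> int:
    """Sum price times quantity, in cents to avoid rounding errors."""
    return sum(prices_cents[item] * quantity for item, quantity in cart.items())


def test_quantity_increases_and_decreases():
    cart = {}
    increase(cart, "mug", by=2)
    assert cart == {"mug": 2}
    decrease(cart, "mug")
    assert cart == {"mug": 1}
    decrease(cart, "mug")
    assert cart == {}  # item is removed when its quantity hits zero


def test_total_is_calculated_exactly():
    cart = {"mug": 2, "pen": 3}
    prices = {"mug": 1250, "pen": 199}  # sample prices in cents
    assert total_cents(cart, prices) == 3097  # 2 * 1250 + 3 * 199


test_quantity_increases_and_decreases()
test_total_is_calculated_exactly()
```

Because these tests never touch a browser or a server, they run in milliseconds, which is why this level can cover many more input values than the gray box or black box levels.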

Manual testing

Manual testing follows the steps of a user performing workflows in the application. The goal is to uncover any issues in functionality and usability. Before performing manual testing, know what scenarios you want to cover. You will have to understand your customers and identify both their typical and nontypical use cases. Testing at this level is done in the test phase. Manual testing is a type of black box testing.

Let's look at an example for an airline site where customers search and buy flights or check their flight status. I will focus specifically on the feature for searching for flights. First, I'll take some time to brainstorm scenarios that a user can perform while searching for flights. All the scenarios I brainstorm will be added to a test plan for this feature. I'll think about the happy path scenarios, which are scenarios that have successful results. This includes searching for valid one-way flights and round-trip flights and getting a list of flights available. Then I'll think about sad path scenarios, which are scenarios that return errors or do not have results. This includes scenarios such as searching with the same city as origin and destination, searching for invalid routes, and searching for routes that do not operate on certain days.

Then I'll write the scenarios down in a test plan spreadsheet, specifying the scenario and expected result. Here I can even get down to a more granular level for the scenario by specifying the origin airport, destination airport, departure date, and return date. I can use this test data to execute the test scenarios. This isn't necessary but can be helpful so that there are specific examples of data to see the results of the scenarios. Having this granular level of data is especially helpful for automation, which we will take a closer look at later. Once a feature is ready to be tested, I will refer back to my plan to manually test each scenario specified and mark whether it passed or failed in the latest result column. Any failing scenarios will need to be addressed and fixed before the feature will be considered done and ready for release.

UI automation testing

UI automation is like manual testing but uses a script to automate test scenarios. The benefit of UI automation is that scenarios can be executed repeatedly by running a test script. This will help catch regressions and other oddities much quicker than when done manually. Another benefit is that the scripts can be run on any platform or browser, which is great because it will emulate the interactions of users and their platform and browser combinations. UI automation tests can be started in the build phase of the SDLC and then completed after the functionality has been developed during the test phase. It is a type of black box testing because it provides input to the UI of an application and expects particular data returned as a result.

To get started I refer to the test plan for the feature at hand and determine which scenarios will be automated. I recommend only automating the most important workflows and not every single scenario, because each UI test can take anywhere from a few seconds up to a minute to complete. As the test project continues to grow over time, the more UI automation that exists, the longer it will take to execute all the scenarios. Looking at the test plan for the flight search feature, I'll decide to automate the first three scenarios, because if any of these fail I can assume the feature is broken, and there is less value in automating the sad path cases.

After deciding what to automate I will start writing a test script to search for flights. Each scenario will be one test. In each test I will write code to drive actions in my browser by simulating entering flight data including origin, destination, and flight date. I can then send actions to the browser to press the search button. Luckily I already have some sample routes I've defined in my test plan, and I will use the same city data and trip dates in my automation tests as well. Once the test script is complete, make sure it runs reliably and runs often so that any irregularities in the application's interface can be caught and corrected.

Integration testing

With the surface level of the application tested by manual and UI automation testing, a QA engineer might want to move on to test the application from another perspective. Integration testing focuses on the interaction between components at lower layers of the application. This level of testing covers similar scenarios to those we've seen with manual and UI automation testing, but doesn't look at what's happening at the UI level as a result. Instead, integration tests see how the system reacts to certain actions. Integration tests have some knowledge of how the system works internally, and because of that they are a type of gray box testing. QAs or developers usually write these tests during the build and test phases.

Take for example the scenario of searching for a one-way flight. Here we can send a request to search for a flight, providing the flight parameters to the server directly instead of performing the action from the UI. We'll then wait for the server to send a response to the request and can confirm that the API returns the information we expect, such as a successful status code and the expected flight data. This type of integration test checks the interaction between the application and the server to confirm that the right information is sent and received. If the request returns the wrong status code or incorrect flight data, we know that there is a problem with the flight search feature. The benefits are that it can catch issues with functionality and can be run in a matter of seconds, meaning we can write more integration tests than UI automation tests. QA engineers should gain familiarity with integration testing and look for opportunities to implement it for each application feature.
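As a rough illustration of the flight search check described above, the sketch below sends requests straight to a server and verifies the status code and response body. Everything about the API is an assumption made for the example: the `/flights` path, the query parameter names, the JSON shape, and the airport codes. A small stub server stands in for the real service so the example runs on its own.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.error import HTTPError
from urllib.parse import parse_qs, urlencode, urlparse
from urllib.request import urlopen


class StubFlightHandler(BaseHTTPRequestHandler):
    """Stand-in for the real flight search service (hypothetical API)."""

    def do_GET(self):
        params = parse_qs(urlparse(self.path).query)
        origin = params.get("origin", [""])[0]
        destination = params.get("destination", [""])[0]
        if not origin or origin == destination:
            status, body = 400, {"error": "invalid route"}
        else:
            flight = {"origin": origin, "destination": destination}
            status, body = 200, {"flights": [flight]}
        payload = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, *args):
        pass  # keep test output quiet


def search(base_url, origin, destination, date):
    """Send the search request directly to the server, bypassing the UI."""
    query = urlencode({"origin": origin, "destination": destination, "date": date})
    try:
        with urlopen(f"{base_url}/flights?{query}", timeout=5) as response:
            return response.status, json.load(response)
    except HTTPError as error:  # urllib raises on 4xx and 5xx responses
        return error.code, json.load(error)


# Start the stub on a free local port; a real test would target a test environment.
server = ThreadingHTTPServer(("127.0.0.1", 0), StubFlightHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
base_url = f"http://127.0.0.1:{server.server_port}"

happy = search(base_url, "SFO", "JFK", "2030-01-15")  # valid one-way route
sad = search(base_url, "SFO", "SFO", "2030-01-15")    # same origin and destination

server.shutdown()
server.server_close()

assert happy == (200, {"flights": [{"origin": "SFO", "destination": "JFK"}]})
assert sad[0] == 400
```

Against a real service, only the `search` helper and the assertions would remain, with `base_url` pointing at a test environment.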

Performance testing

Performance testing is done to benchmark how a system performs under load. It will help ensure that an application can scale over time and use. It is a type of black box testing because we test how the system operates under certain stress but don't have visibility into what's going on inside the system. While manual, UI automation, and integration testing are the most common for software projects, performance testing is also very valuable. There are a few different types of performance testing.

Load testing checks the application's ability to perform under anticipated user loads. The objective is to identify the maximum operating capacity of an application by observing when bottlenecks occur. If I focus on the search flights feature, this sends a request to look for flights and returns all results found. I'll want to set up a test to simulate many users simultaneously searching for flights. I can use software that generates thousands of different sessions of flight searches. While the system is under load, I'll want to monitor how long the requests take to complete, and identify when spikes, or points of long load time, start to occur in the application.

There is also endurance testing. Endurance testing, sometimes referred to as soak testing, is done to make sure the application can handle the expected load over a long period of time. This involves simulating many flight search requests and monitoring the system to see if performance degrades. The goal of endurance testing is to check for system problems such as memory leaks. It is similar to load testing but lasts much longer and monitors unusual behavior that can occur in the application or database.

And then there is stress testing. Stress testing involves testing an application

Security testing

As you know, people don't always use the internet for good. Some try to break into applications or find ways to bring them down. Security testing is performed to reveal flaws or vulnerabilities that can be exploited by users. Manual, UI automation, and integration tests focus more on functionality and confirming it works as expected. Security testing instead looks to expose problems in the application that can either cause it to behave in unexpected ways or stop it from working. Potential problems can include loss of customer data and trust, decline in revenue, and website downtime. There are so many different ways to approach security testing because there are so many ways someone could try to hack an application.

SQL injection is one of the most common types of attacks, used by hackers to insert SQL database statements into any text field. This can expose critical information and allow the system to be manipulated. Thinking about the search flights feature, I could use SQL injection on different fields in the search flights page and try to inject statements that will manipulate flight data. I'll want to test where SQL can be injected. To protect against it, I'll validate the values received before passing the query to the database.

Another common and harmful type of attack is a denial-of-service (DoS) attack. This type of attack tries to take down an application, making it inaccessible to users. In terms of flight search, it can be possible to use bots to flood the server with traffic, searching for a large number of flights, or to try to send information that will crash a server. To stop a DoS attack, tools can be used to identify requests that are likely coming from bots and eliminate that traffic.
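Returning to the SQL injection scenario for flight search, here is a minimal sketch of what such a test could look like. The table layout, function names, and sample routes are hypothetical. Note that the safe version uses parameter binding, a common defense that keeps input out of the SQL text entirely, rather than the hand-written validation mentioned above; the two are often used together.

```python
import sqlite3

# In-memory database with a hypothetical flights table and sample routes.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE flights (origin TEXT, destination TEXT)")
conn.executemany(
    "INSERT INTO flights VALUES (?, ?)",
    [("SFO", "JFK"), ("LAX", "SEA")],
)


def search_unsafe(destination: str) -> list:
    """Vulnerable: user input is pasted straight into the SQL text."""
    query = (
        "SELECT origin, destination FROM flights "
        f"WHERE destination = '{destination}'"
    )
    return conn.execute(query).fetchall()


def search_safe(destination: str) -> list:
    """Parameter binding: the driver treats the input as a value, never as SQL."""
    query = "SELECT origin, destination FROM flights WHERE destination = ?"
    return conn.execute(query, (destination,)).fetchall()


# Input a tester might type into the destination field.
malicious = "JFK' OR '1'='1"

# The injected OR clause matches every row in the vulnerable version.
assert len(search_unsafe(malicious)) == 2

# With binding, the whole string is compared as a literal and nothing matches.
assert search_safe(malicious) == []
assert search_safe("JFK") == [("SFO", "JFK")]
```

A security test for the real application would send the same kind of input through each search field and confirm that the results look like the safe case.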

Bug Reporting

Identify bugs

- When an application is built perfectly, there will be no bugs. But as we know, humans are error-prone, and there's no such thing as a perfect software application. Bugs are inevitable. They occur when the system does not work as designed or specified, and result in incorrect or unexpected behavior in an application. A well-known early story of a computer bug comes from when Grace Hopper was working on a relay calculator and operations were not completing because a moth was physically stuck in the computer. Removing the moth fixed the problems and operations started completing successfully again. An example of a bug can be when a vending machine gives the wrong item. Perhaps I selected A5 but the item from B5 was dispensed instead. Or even worse, nothing dispenses at all. Another example of a bug can be when an ecommerce website does not calculate the right sales tax or total. Bugs are versatile. They can be low impact or high impact, they can affect many users or just a handful of users, they can be constantly reproducible or not, and some bugs even go away and come back later. The only constant with bugs is that they will keep appearing. It will be necessary to determine how to manage bugs for a project so that the application can continue to run smoothly.

Report bugs

- Once a bug is identified it should be reported right away. Use a bug reporting system to create and manage bug reports. Bug reporting systems make it easy to do things like report, triage, view, assign, and close bugs. There is an abundance of options available. Some focus specifically on bug management while others provide a variety of services to manage a software project. Some popular options include Jira, Rally, GitHub, and Bugzilla, to name a few. I recommend managing bugs within the same software you use to manage your project. Otherwise, pick a tool specifically for bugs if that suits your needs best. For example, if your team uses Jira to track features, user stories, and tasks, then bugs can be tracked there as well. With Jira, there are many attributes that can not only describe the bug but also assign a priority, status, or owner to the issue. Other systems like GitHub have fewer attributes but allow you to put an abundance of information in the description, using markdown to style the body, and allow labels to be attached to the bug to provide extra information. All bug reporting systems are different. At a minimum, they allow reporters to thoroughly describe the bug. It's also beneficial to be able to categorize bugs so that they become easy to find and analyze. Choose a bug reporting system to accurately report and manage bugs. When reporting bugs, it's necessary to report details about the bug and any other details that will be helpful when reviewing and fixing the bug. A bug report should include details like the name of the bug, a description with steps to reproduce it, an expected and actual result, a picture, video, or screenshot, the browser or software version, and a log when applicable. Let's look at an example bug report for an ecommerce application where items are not added to the cart. The steps to reproduce are to go to the application home page, select the first item, and choose add to cart. The expected result is that when I go to the cart, I can see the item that I selected has been added. The actual result is that when I go to the cart there are no items. I'll also record and include a GIF which clearly shows the steps to reproduce the bug and see the problem. And then I'll check in my browser console to see if there were any helpful logs I can find and attach. Lastly, I'll tag an individual or team to look into the bug, give it a priority of high, and a status of ready for development. It helps to set up a bug report template so that all fields are presented to the bug reporter to provide thorough detail about the issue they found. This makes all bug reports follow a similar structure with enough information for anyone who reads the report to understand.
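The fields of the example bug report can be captured as a small data structure. The sketch below is one possible shape, not the format of any particular tool: the `BugReport` class, its field names, and the markdown layout are assumptions, and the environment, attachment names, and assignee are hypothetical values added only to fill in the example.

```python
from dataclasses import dataclass, field


@dataclass
class BugReport:
    title: str
    steps_to_reproduce: list[str]
    expected_result: str
    actual_result: str
    environment: str
    attachments: list[str] = field(default_factory=list)
    priority: str = "medium"
    status: str = "new"
    assignee: str = "unassigned"

    def to_markdown(self) -> str:
        """Render the report as markdown, e.g. for a GitHub issue body."""
        lines = [f"# {self.title}", "", "## Steps to reproduce"]
        lines += [f"{i}. {step}" for i, step in enumerate(self.steps_to_reproduce, start=1)]
        lines += ["", "## Expected result", self.expected_result]
        lines += ["", "## Actual result", self.actual_result]
        lines += ["", "## Environment", self.environment]
        lines += ["", "## Attachments"]
        lines += [f"- {name}" for name in self.attachments] or ["- none"]
        lines += ["", f"Priority: {self.priority} | Status: {self.status} | Assignee: {self.assignee}"]
        return "\n".join(lines)


# The cart bug from the transcript; environment, files, and assignee are hypothetical.
example_report = BugReport(
    title="Items are not added to the cart",
    steps_to_reproduce=[
        "Go to the application home page",
        "Select the first item",
        "Choose add to cart",
        "Go to the cart",
    ],
    expected_result="The selected item appears in the cart.",
    actual_result="The cart has no items.",
    environment="Chrome on macOS (hypothetical)",
    attachments=["add-to-cart.gif", "browser-console.log"],
    priority="high",
    status="ready for development",
    assignee="cart-team (hypothetical)",
)

print(example_report.to_markdown())
```

Because every field is required or has a default, a report built this way behaves like a template: the reporter is prompted for each detail and all reports end up with the same structure.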

Triage bugs

- You will want to regularly go through and review open bugs with representatives from development, business, and QA. Start with new bugs and identify the severity and priority of each one. Severity is based on how impactful the bug is to the business, and priority is based on how fast the bug should be fixed. Let me show you what this looks like so you can better visualize it. Severity and priority can be plotted on a basic graph. Let severity be on the x-axis and priority on the y-axis. In the lower left-hand quadrant, there is low severity and low priority. These types of bugs only affect a small number of customers and don't affect major workflows. This level of bug isn't very impactful. In the upper left-hand quadrant, there's low severity and high priority. Bugs here are important to fix. While the bug might be very visible, it is not preventing users from completing any workflow. For example, on an e-commerce site, during the checkout, there might be a glaring UI bug that breaks some HTML. While it is distracting, it is not preventing users from purchasing items. In the lower right-hand quadrant, there is high severity and low priority. Bugs here cause major problems, but only under some circumstances. For example, the bug might only affect users on an outdated operating system such as Windows 7. In the upper right-hand quadrant, there is high severity and high priority. Bugs here are major problems that affect most customers. Bugs at this level indicate service disruption and cause the application to be unusable in some workflows. For example, perhaps users are unable to purchase any items. This is frustrating for users and equally frustrating for the business because they can't make any money. Using this matrix will help decide how to best order bugs to be fixed. You can then classify bugs as level one through four, or high, medium, low. After all new bugs are prioritized, you can get to work on fixing them in order of importance, and don't forget to set up a regularly occurring meeting to triage bugs.
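The severity and priority matrix can also be written down as a small sorting rule. This is only a sketch: the `Bug` class and function names are hypothetical, the footer typo is an invented low/low example, and ranking the low severity, high priority quadrant ahead of the high severity, low priority quadrant is an assumption that a team may well choose differently.

```python
from dataclasses import dataclass


@dataclass
class Bug:
    title: str
    high_severity: bool  # x-axis: how impactful the bug is to the business
    high_priority: bool  # y-axis: how fast the bug should be fixed


def quadrant(bug: Bug) -> str:
    """Name the quadrant of the severity/priority graph the bug falls in."""
    vertical = "upper" if bug.high_priority else "lower"
    horizontal = "right" if bug.high_severity else "left"
    return f"{vertical} {horizontal}"


def triage_level(bug: Bug) -> int:
    """Map a quadrant to level 1 (fix first) through 4 (fix last).

    Assumed ordering: priority is checked before severity for mixed cases.
    """
    if bug.high_severity and bug.high_priority:
        return 1
    if bug.high_priority:
        return 2
    if bug.high_severity:
        return 3
    return 4


def order_for_fixing(bugs: list[Bug]) -> list[Bug]:
    """Sort bugs so the most urgent level comes first."""
    return sorted(bugs, key=triage_level)


bugs = [
    Bug("Typo in page footer (hypothetical)", high_severity=False, high_priority=False),
    Bug("Crash on an outdated operating system", high_severity=True, high_priority=False),
    Bug("Checkout UI bug breaks some HTML", high_severity=False, high_priority=True),
    Bug("Users are unable to purchase any items", high_severity=True, high_priority=True),
]

for bug in order_for_fixing(bugs):
    print(triage_level(bug), quadrant(bug), "-", bug.title)
```

In a triage meeting, the two booleans would be decided by the group for each new bug, and the resulting level gives a starting order that the team can still adjust by hand.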

Communicate bugs to the team

- After bugs have been prioritized, communicate details about the status of bugs with the team. Share things like the top priority bugs. Listen to the team to reach consensus that bugs have been prioritized correctly. Clarify the effort and time it will take to fix bugs, and identify who will be able to fix them. To communicate the status of bugs, it can also be nice to have a bug-specific project board. The project board can include columns for new, ready for development, in progress, QA, and done. Place bugs in the appropriate column and keep the status updated. You can also capture analytics about your bugs, such as the number opened and closed per month. This can give you an idea of how frequently bugs are being fixed in relation to how many are being created. Identify the features where bugs are coming from. This can be good to see where bugs are occurring in the application and if there are any trends. Communicating bugs to the team will let the team know that it's important to acknowledge and fix bugs regularly.
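The bug analytics mentioned above, bugs opened and closed per month and bugs per feature, can be computed with a few counters. This is a minimal sketch assuming the data has already been exported from a bug tracker: the `BugRecord` type, the feature names, and the dates are all made up for illustration.

```python
from collections import Counter
from datetime import date
from typing import NamedTuple, Optional


class BugRecord(NamedTuple):
    feature: str
    opened: date
    closed: Optional[date] = None  # None means the bug is still open


def month_key(day: date) -> str:
    """Format a date as YYYY-MM so bugs can be grouped by month."""
    return day.strftime("%Y-%m")


def opened_and_closed_per_month(bugs: list[BugRecord]) -> dict[str, dict[str, int]]:
    """Count how many bugs were opened and closed in each month."""
    opened = Counter(month_key(b.opened) for b in bugs)
    closed = Counter(month_key(b.closed) for b in bugs if b.closed is not None)
    months = sorted(set(opened) | set(closed))
    return {m: {"opened": opened[m], "closed": closed[m]} for m in months}


def bugs_per_feature(bugs: list[BugRecord]) -> list[tuple[str, int]]:
    """List features with their bug counts, most bugs first."""
    return Counter(b.feature for b in bugs).most_common()


# Made-up sample data standing in for an export from a bug tracker.
sample_bugs = [
    BugRecord("cart", date(2024, 1, 5), date(2024, 1, 20)),
    BugRecord("cart", date(2024, 1, 18), date(2024, 2, 2)),
    BugRecord("checkout", date(2024, 2, 10)),
    BugRecord("search", date(2024, 2, 11), date(2024, 2, 15)),
]

print(opened_and_closed_per_month(sample_bugs))
print(bugs_per_feature(sample_bugs))
```

If more bugs are opened than closed month after month, or one feature keeps topping the list, that is a trend worth raising with the team.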

Have bug bashes

- It's important for your team to dedicate time to both find and fix bugs. To find bugs, it can be great to have mob testing sessions around particular features in the application. Set aside one hour and have a group of teammates get together to test. They can either do exploratory testing of a specific feature to help uncover bugs, or they can do manual testing using a test plan. The second method is a bit more guided and expects testing to follow the defined steps and scenarios. I recommend doing mob testing at least one week before the release date for every major feature, so your team has time to fix any identified issues. Outside of development sprints, have a bug bash: a week where team members focus entirely on fixing bugs. This allows individuals who don't always fix bugs to dig deeper into the codebase. I recommend having bug bashes two to four times a year. They can be great for your application's health, and fun for your team.
